LLM-Native Product Capabilities

Ben Thompson likes to point out that early websites were essentially static copies of physical world artifacts like newspapers and brochures. The web only reached its potential during the Web 2.0 era when developers discovered truly native capabilities that previous mediums couldn't match.

For example, the feed's infinite scrolling experience created a new canvas for Facebook and others to display ads. Real-time collaboration breathed life into once-solitary documents, enabling Google Docs, Trello, and others to build virtual workshops where teams create together. On-demand resource access enabled streaming services like Netflix to provide thousands of content options without waiting 3-5 days for a red envelope full of bits.

The emergence of LLMs has opened up new design space for products, enabling features that were previously impossible. Since ChatGPT made its splash in late 2022, we've had about two years of product teams experimenting with LLMs to see what would stick. Today, we are starting to see the shape of LLM-native capabilities that bring real value to users.

Let's explore six LLM-native capabilities that have emerged, how they're being used today, and where opportunities remain to deploy them in building better products.

1. Content Generation

Google Search's AI Overview demonstrates how LLMs excel at generating comprehensive content about given topics (even if they occasionally hallucinate details). Companies like Copy.ai and Jasper have embraced LLM-powered content generation to help create marketing materials.

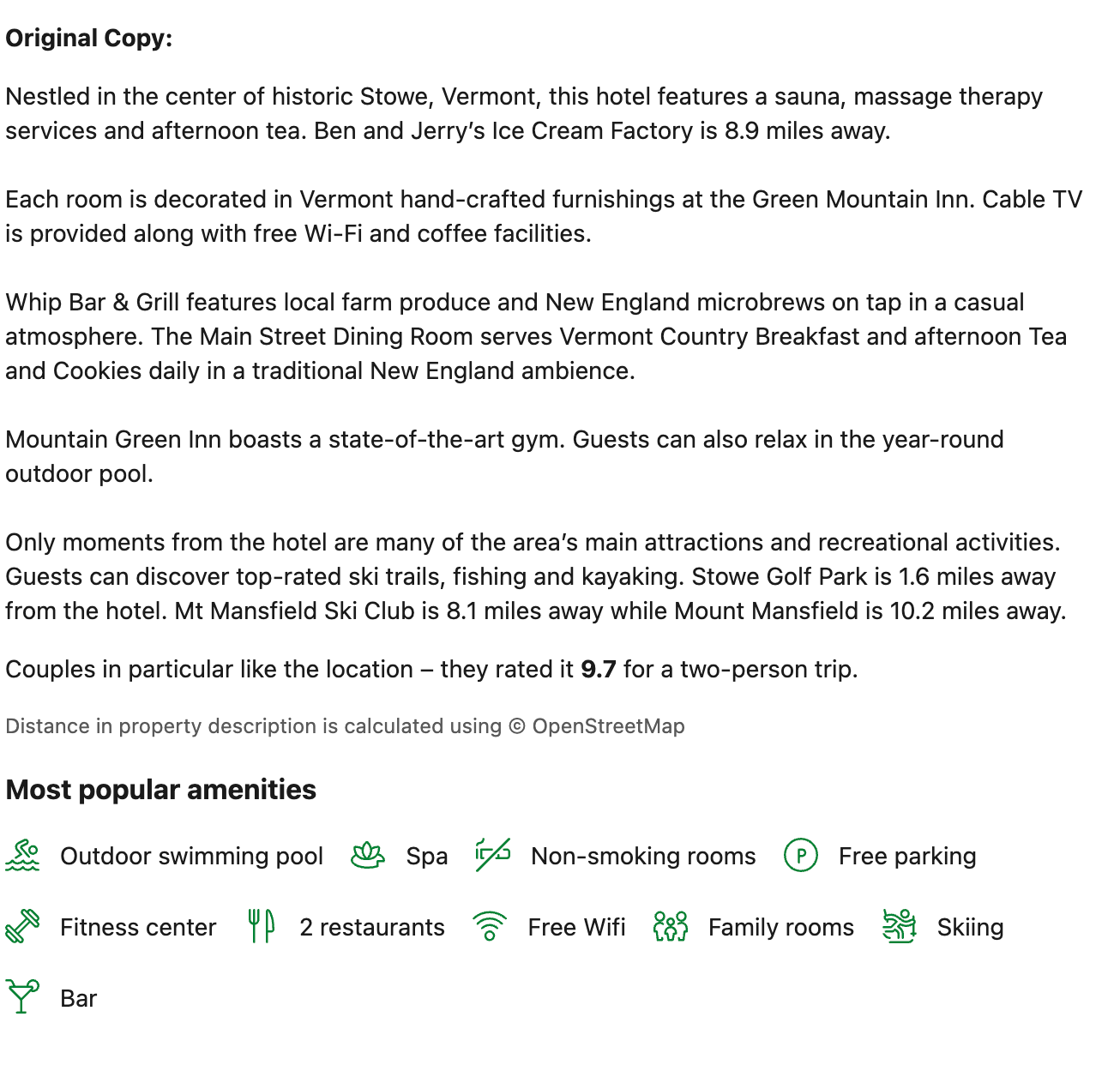

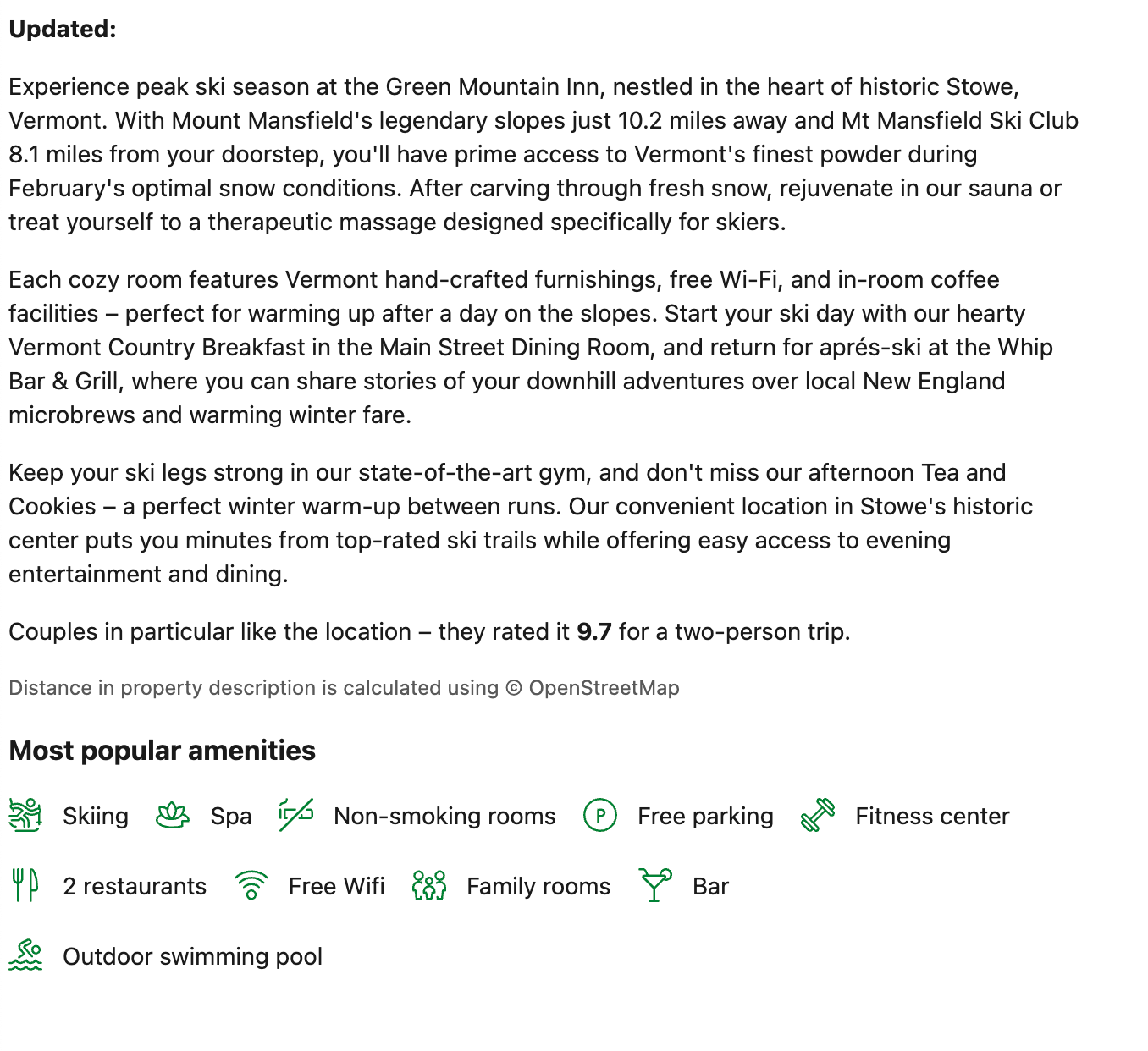

Personalization through memory and context represents one of the most promising frontiers for LLM-powered products. ChatGPT's conversation memory showed us just the beginning: the ability to maintain context and adapt responses based on user history opens up new possibilities for user experience. Imagine hotel listings that don't just filter results, but completely reframe their content based on your preferences: highlighting the quiet workspace for business travelers, emphasizing the kid-friendly amenities for families, or focusing on nearby nightlife for young tourists. This kind of dynamic, personalized content generation could transform how users expect to interact with products across every industry.

If I'm searching for a hotel in Vermont in February, I care about skiing, not ice cream.

Personalization extends far beyond individual user preferences. Enterprise tools like Glean are pioneering organization-wide personalization by tapping into company wikis, internal documentation, and proprietary data. This creates an entirely new category of contextual intelligence, where responses aren't just personalized to you, but to your role, your team's terminology, your company's processes, and your organization's collective knowledge. A developer asking about 'deployment' gets answers specific to their company's CI/CD pipeline, while a sales rep sees information about their team's deployment playbooks.

2. Pattern Parsing

The second key capability is extracting information from unstructured documents. The chat transcript titles on ChatGPT or Claude.ai offer excellent examples of this functionality.

While Google Docs had a pre-LLM version of this (suggesting document titles based on first sentences), it relied on simple context clues. LLMs enable more sophisticated parsing. Imagine automatically extracting the purpose of a SQL worksheet, using the existing select queries to give it a meaningful name like "May 2024 visitor count exploration" instead of "2024-06-05 11:12:03am". That will be vastly more helpful months later when you are looking through your past worksheets to reconstruct a similar query.

Less visible but equally important are the various libraries and APIs that make it easy to extract structured information from LLM-generated content, bridging the gap to traditional programming tools and existing services.
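As a rough illustration of that bridging step, here is a minimal sketch that pulls a JSON object out of a chatty model reply and validates it before ordinary code touches it. The `WorksheetSummary` type, the field names, and the sample reply are all assumptions made up for this example, not the API of any particular library.

```python
import json
import re
from dataclasses import dataclass


@dataclass
class WorksheetSummary:
    title: str
    tables: list[str]


def extract_json_object(reply: str) -> dict:
    """Parse the text between the first '{' and the last '}' in a model reply."""
    match = re.search(r"\{.*\}", reply, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in reply")
    return json.loads(match.group(0))


def parse_worksheet_summary(reply: str) -> WorksheetSummary:
    """Check the fields we expect before handing them to the rest of the program."""
    data = extract_json_object(reply)
    title = data.get("title")
    tables = data.get("tables", [])
    if not isinstance(title, str) or not title.strip():
        raise ValueError("missing or empty 'title'")
    if not isinstance(tables, list) or not all(isinstance(t, str) for t in tables):
        raise ValueError("'tables' must be a list of strings")
    return WorksheetSummary(title=title.strip(), tables=tables)


# Hypothetical model reply: JSON wrapped in conversational text.
reply = """Sure! Here is the summary:
{"title": "May 2024 visitor count exploration", "tables": ["page_views"]}
Let me know if you need anything else."""

summary = parse_worksheet_summary(reply)
print(summary.title)
```

The validation step is the important part: the model's output is treated as untrusted input, and a malformed reply raises an error instead of silently producing a bad filename.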

Quick wins in this space could include helping to name scratchpad worksheets, providing context for screenshots in macOS, or upgrading any automatically generated filename (timestamps, hashes or ids) with richer context.

3. Intent Translation

Intent Translation helps users achieve their goals by converting natural language descriptions into precise commands supported by the system. GitHub Copilot exemplifies this, helping developers write code based on descriptions and context. Any tool with a complex query language can benefit from Intent Translation, helping both newcomers and experts become productive faster.

While many focus on how this helps non-experts access complex tools with less training, Intent Translation may prove even more valuable for experts who deeply understand the capabilities and limitations of underlying commands, enabling them to work faster and more easily transfer skills to adjacent domains.

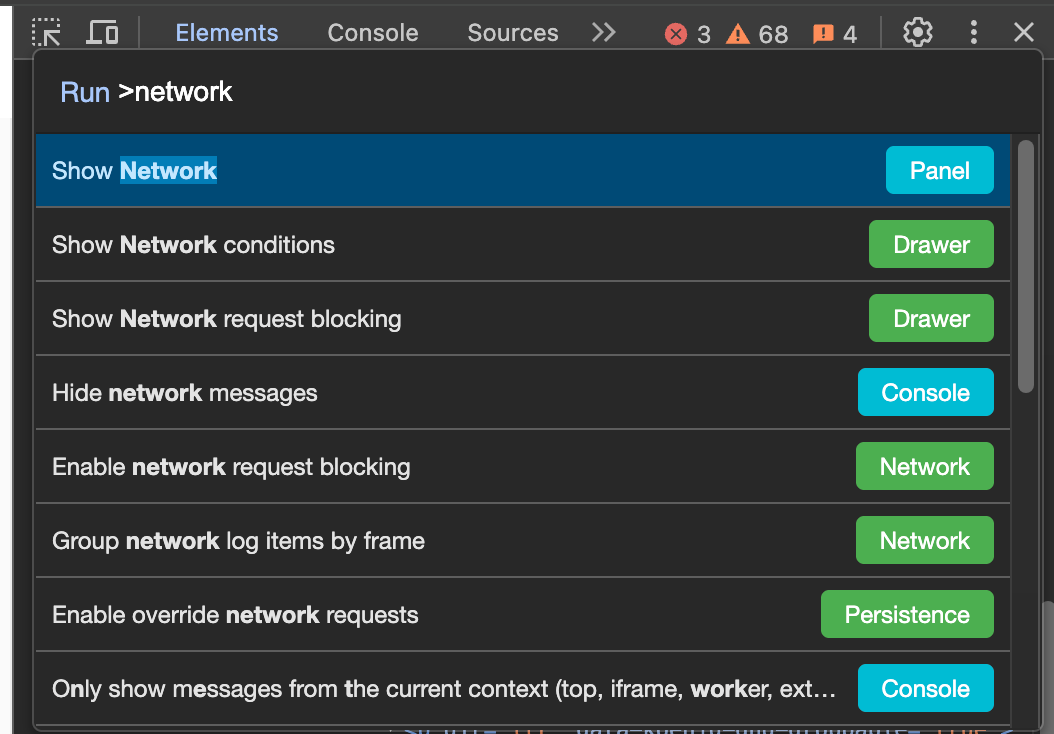

As LLM interfaces become standard for complex business tools, we might see them visually de-emphasized in UIs but always available via quick shortcuts. This could spark a renaissance in command palettes for power users, as the work to expose and document commands for LLMs can be repurposed to provide shortcuts for experts who prefer not to wait for LLM processing.
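One way to picture that reuse is a single command registry feeding both audiences. The sketch below is an assumption-laden toy: the `Command` type, the three commands, and the helper names are hypothetical, and the fuzzy matching uses Python's standard `difflib` rather than anything a real product is known to use.

```python
import difflib
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Command:
    name: str
    description: str
    run: Callable[[], str]


# Hypothetical commands for an imaginary analytics tool.
COMMANDS = [
    Command("export-csv", "Export the current table as a CSV file", lambda: "exported"),
    Command("new-worksheet", "Create an empty SQL worksheet", lambda: "created"),
    Command("share-dashboard", "Share the open dashboard with a teammate", lambda: "shared"),
]


def tool_descriptions(commands: list[Command]) -> str:
    """Render the registry as text that could be placed in an LLM prompt."""
    return "\n".join(f"- {c.name}: {c.description}" for c in commands)


def palette_search(query: str, commands: list[Command], limit: int = 3) -> list[Command]:
    """Match a typed query against command names with no LLM involved."""
    by_name = {c.name: c for c in commands}
    q = query.lower()
    # Substring hits come first, then difflib's similarity ranking catches typos.
    hits = [c.name for c in commands if q in c.name]
    fuzzy = difflib.get_close_matches(q, list(by_name), n=limit, cutoff=0.6)
    ordered = list(dict.fromkeys(hits + fuzzy))  # de-duplicate, keep order
    return [by_name[name] for name in ordered[:limit]]


print(tool_descriptions(COMMANDS))
print([c.name for c in palette_search("export", COMMANDS)])
```

The same names and descriptions serve the model (as prompt text) and the expert (as instant, local search), so the documentation work is only done once.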

4. Conversational Interfaces

ChatGPT's explosive success, even though the underlying model had existed for six months without attracting much interest, parallels early computing history. One-shot LLM generation resembles batch processing, requiring all inputs upfront. In contrast, chat provides all the power of a REPL, allowing users to iteratively refine results and discover possibilities they hadn't initially considered.
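The batch-versus-REPL contrast can be sketched in a few lines. This is only an illustration under stated assumptions: `echo_model` is a hypothetical stand-in for a real LLM call, and the message format is a common convention rather than any specific vendor's API.

```python
from typing import Callable

Message = dict[str, str]
Model = Callable[[list[Message]], str]


def one_shot(model: Model, prompt: str) -> str:
    """Batch style: everything the model will ever see is in a single prompt."""
    return model([{"role": "user", "content": prompt}])


class ChatSession:
    """REPL style: each turn is evaluated against the accumulated history."""

    def __init__(self, model: Model) -> None:
        self.model = model
        self.history: list[Message] = []

    def send(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        reply = self.model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def echo_model(messages: list[Message]) -> str:
    """Hypothetical stand-in for an LLM: reports how much context it was given."""
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"seen {len(user_turns)} user turn(s); latest: {user_turns[-1]}"


session = ChatSession(echo_model)
print(session.send("Draft a tagline for a ski hotel"))
print(session.send("Make it shorter"))
```

The second `send` only makes sense because the first exchange is still in the history, which is exactly what a one-shot call cannot offer.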

Beyond ChatGPT, conversational interfaces have become ubiquitous in customer support and show promise in educational contexts, particularly for interactive tutoring and language learning.

While chat was LLMs' first killer app and won't disappear, conversational interfaces may become one of the less frequent LLM-powered features users interact with in the future. This shift could occur as other LLM capabilities, such as intent translation and content generation, provide faster and more seamless ways to achieve results without requiring extended dialogue. The time required for interaction makes it difficult for chat to compete with more attention-efficient LLM features.

5. Evaluators

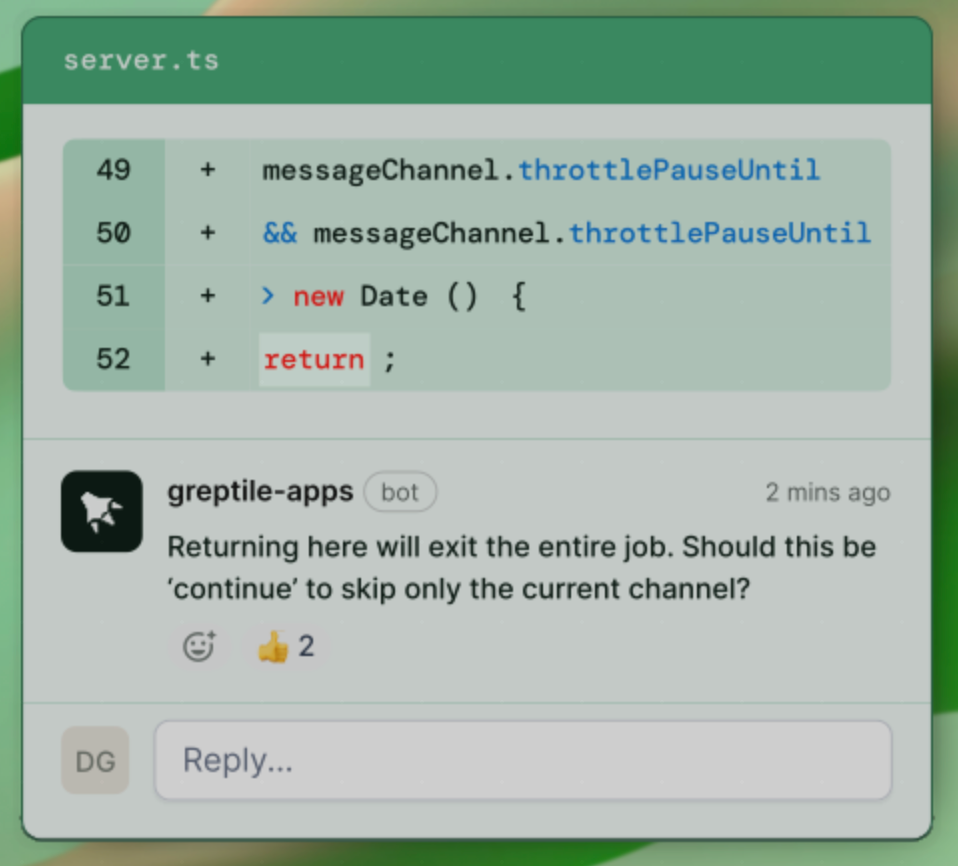

The current generation of LLMs shows potential at assessment, comparison, and structured feedback. Given appropriate rubrics, they can even provide scoring or grading. While companies like Greptile.com leverage LLMs for automated code review, others are applying this capability in novel ways. Educational platforms use LLMs to provide detailed essay feedback, recruitment tools employ them to screen resumes against job requirements, and content platforms utilize them to assess user-generated content for quality and policy compliance.

However, modern LLMs aren't always the optimal tool for evaluation. They can struggle with consistency across evaluations, may miss edge cases, and often require significant prompt engineering to maintain reliability. While they can serve as an interim solution, many evaluation tasks may ultimately be better handled by deterministic algorithms or dedicated ML models trained on specific evaluation criteria.
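One common mitigation for inconsistent scores is to repeat the evaluation and look at both the median and the spread. The sketch below assumes a hypothetical weighted rubric and a fake judge whose scores drift between calls; a real system would replace `make_noisy_judge` with an actual model call.

```python
import statistics
from typing import Callable

# Hypothetical rubric: criterion name -> weight in percent (weights sum to 100).
RUBRIC = {"correctness": 50, "clarity": 30, "style": 20}

Judge = Callable[[str, str], int]  # (submission, criterion) -> score from 1 to 5


def score_once(judge: Judge, submission: str) -> float:
    """Weighted rubric score from a single pass of the judge."""
    total = sum(weight * judge(submission, name) for name, weight in RUBRIC.items())
    return total / 100


def score_with_repeats(judge: Judge, submission: str, runs: int = 5) -> tuple[float, float]:
    """Repeat the evaluation and report the median plus the spread across runs."""
    scores = [score_once(judge, submission) for _ in range(runs)]
    return statistics.median(scores), max(scores) - min(scores)


def make_noisy_judge() -> Judge:
    """Stand-in for an LLM judge whose scores drift between calls."""
    calls = 0

    def judge(submission: str, criterion: str) -> int:
        nonlocal calls
        calls += 1
        return 4 if calls % 4 else 2  # every fourth call is an outlier

    return judge


median, spread = score_with_repeats(make_noisy_judge(), "def add(a, b): return a + b")
print(f"median={median} spread={spread}")
```

A large spread is a useful signal in its own right: it suggests the rubric or prompt is underspecified, or that the task may be a better fit for a deterministic check.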

6. Agents

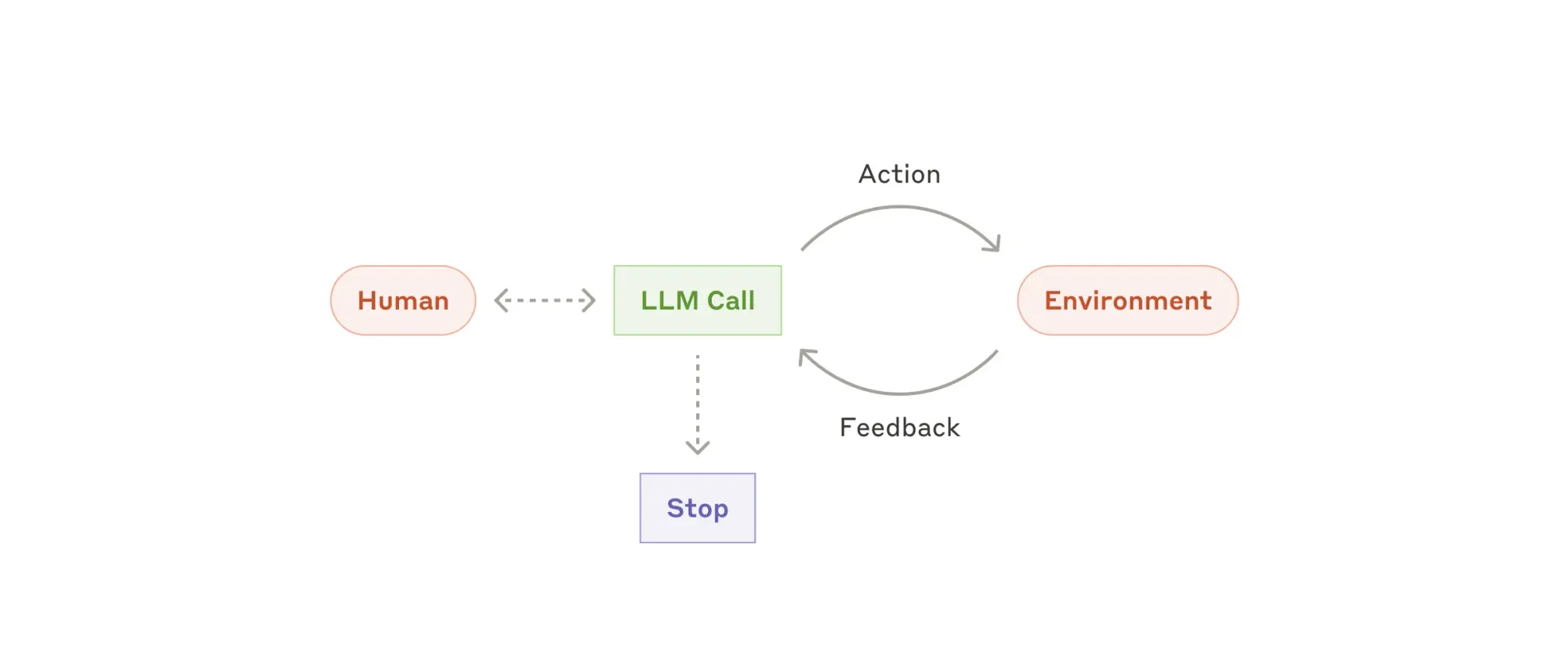

Agents combine many of the capabilities above into systems that dynamically direct their own processes and tool usage to accomplish tasks. They're gaining significant attention in 2025, and I expect substantial product growth as teams become more familiar with AI capabilities and grow more ambitious. Anthropic has published useful documentation on techniques for building effective agents and advanced workflows.
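Stripped to its core, an agent is a loop: ask the model for the next action, run the chosen tool, feed the result back, and stop when the model says it is done. The sketch below is a toy under loud assumptions: `scripted_policy` is a hard-coded stand-in for the LLM, and the two tools are hypothetical placeholders for real integrations.

```python
from typing import Callable

# Hypothetical tools; real ones would call a service catalog, Slack, and so on.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_owner": lambda service: {"checkout": "dana"}.get(service, "unknown"),
    "invite": lambda user: f"invited {user}",
}

Step = dict[str, str]


def scripted_policy(task: str, observations: list[str]) -> Step:
    """Stand-in for the LLM: picks the next action from what it has seen so far."""
    if not observations:
        return {"tool": "lookup_owner", "arg": "checkout"}
    if len(observations) == 1:
        return {"tool": "invite", "arg": observations[0]}
    return {"final": f"done: {observations[-1]}"}


def run_agent(task: str, policy: Callable[[str, list[str]], Step], max_steps: int = 5) -> str:
    """Loop: ask the policy for an action, run the tool, feed the result back."""
    observations: list[str] = []
    for _ in range(max_steps):
        step = policy(task, observations)
        if "final" in step:
            return step["final"]
        tool = TOOLS.get(step["tool"])
        if tool is None:
            observations.append(f"error: unknown tool {step['tool']}")
            continue
        observations.append(tool(step["arg"]))
    return "stopped: step limit reached"


print(run_agent("Bring the checkout owner into the incident channel", scripted_policy))
```

The `max_steps` cap and the unknown-tool branch are the kind of guardrails a production loop would need far more of, since the policy's choices are not under the programmer's direct control.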

Looking ahead, I'm particularly excited about personal assistant agents that can automatically follow up on meeting action items or proactively invite relevant people into incident Slack channels based on context.

The Path Forward

As we witness these native LLM capabilities mature, we're seeing an evolution from simple text generation to sophisticated features that enhance existing products and enable new ones. The most successful implementations share a common thread: they don't just replicate human tasks but augment human capabilities in ways that weren't previously possible.

For product teams, the challenge isn't just implementing these capabilities, but identifying where they can provide real value instead of novelty. The most successful products will be those that subtly use LLMs to solve problems on top of the existing web platform capabilities.

As we move further into 2025 and beyond, we'll likely see these capabilities become more refined and specialized for specific domains. The winners won't be those who simply add LLM features to existing products, but those who reimagine their products with LLM-native thinking from the ground up, just as the most successful web products weren't digitized magazines, but entirely new experiences that leveraged the web's unique capabilities.